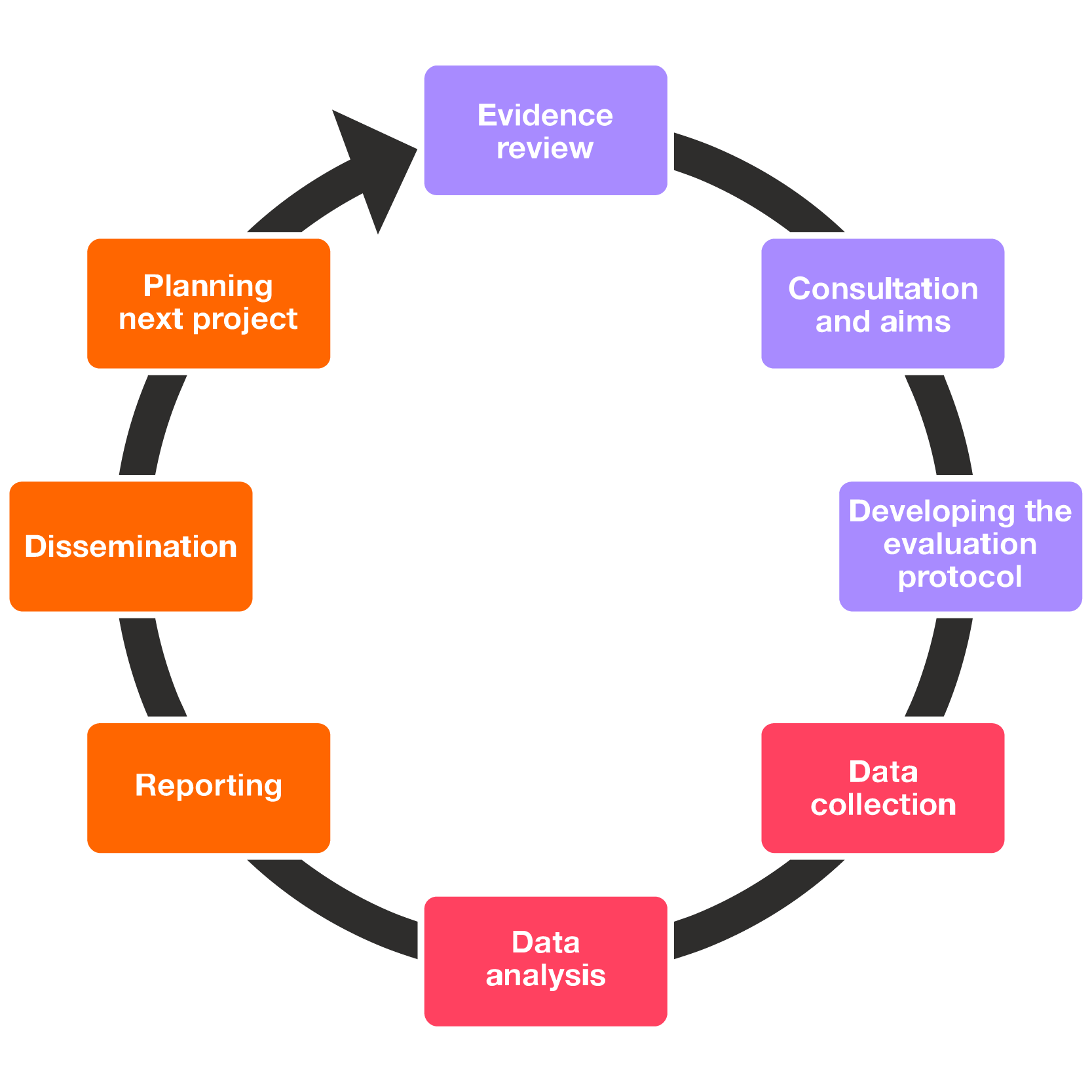

Any evaluation you undertake should be part of an iterative process, with your learning being used to help improve and develop the next phase or project. If you look at it in this way, rather than as a costly and time-consuming ‘add-on’, it becomes obvious that evaluation is an essential part of the process of delivering a project itself. The Evaluation Cycle offers an overarching framework in which to understand evaluation as this iterative process. It was developed by Willis Newson with researchers at the University of the West of England and will fit any kind of evaluation approach you adopt.

This cycle has three main phases: project planning; implementation; and reporting and dissemination. It is worth noting that the implementation phase (including data collection and analysis) is only one part of the cycle. The other two phases, project planning and reporting and dissemination, demand just as much time and thought, and possibly more.

A published version of this cycle was developed by Norma Daykin, Meg Attwood and Jane Willis and its use should be credited to them wherever it is used.

What can you learn from the work of others? In any evaluation, the first step should be to find out what other evaluation and research can tell you about the likely impacts of a project like yours.

An evidence review summarises the available evidence, revealing what is known about the impacts, outcomes and delivery of similar projects. It should also tell you what is still not known, or difficult to establish. This is all critical information for evaluation planning. It will help you to decide what to evaluate and what approach might be appropriate. It may also be useful in planning the project itself. Crucially for an arts and health evaluator with limited resources and time, an evidence review may identify research which – if relevant – can then be used to support or illuminate limited but promising findings from a smaller scale evaluation.

Consulting with the people involved in or affected by your project when planning an evaluation will tell you how they perceive the evaluation and what information they might need from it. Consultation is particularly important at an early stage, both for informing project aims and for identifying appropriate evaluation aims. It can also provide an opportunity to test out ideas and methods and to discuss whether a particular kind of evaluation activity might be appropriate.

Setting aims is a crucial part of the evaluation cycle. A clear set of evaluation aims should inform the questions that the evaluation seeks to answer. Many arts and health projects have a wide range of project aims, not all of which can be easily measured, particularly given limited evaluation resources. Evaluators will need to decide which aims to focus on, based on stakeholder priorities, an understanding of what can actually be measured, and the funds and resources available.

When you have decided on your evaluation aims and questions, you can plan how you will go about answering them. An evaluation framework or protocol describes what you are interested in evaluating and how you will go about doing it. At this point you will also consider what resources you might need, how you will manage the data, what the ethical issues are, and how you are going to report on and disseminate your findings.

An outcomes framework may underpin all this planning. In arts and health terms we generally understand ‘outcomes’ to mean the changes, benefits, learning or other effects that can be attributed to a particular service or activity. These are what you are seeking to measure. An outcomes framework will clearly map each outcome to a set of indicators that will establish whether or not it has happened and a method of measurement. This is a clear and manageable way to understand how and what you will be evaluating.
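For evaluators who keep their planning in a spreadsheet or script, the mapping described above can be sketched as a simple data structure. This is only an illustration: the `Outcome` class, the `unmapped` helper and the example outcomes are hypothetical and do not come from any published framework. The sketch records each outcome with its indicators and measurement method, then flags any outcome that is not yet fully mapped.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Outcome:
    """One row of a hypothetical outcomes framework."""
    description: str       # the change or benefit you hope to see
    indicators: List[str]  # evidence that would show it has happened
    method: str            # how each indicator will be measured


def unmapped(outcomes: List[Outcome]) -> List[str]:
    """Return descriptions of outcomes missing indicators or a method."""
    return [
        o.description
        for o in outcomes
        if not o.indicators or not o.method.strip()
    ]


# Illustrative entries only; real outcomes come from your own project aims.
framework = [
    Outcome(
        description="Participants report improved wellbeing",
        indicators=["Change in self-rated wellbeing between first and last session"],
        method="Closed-question questionnaire at start and end",
    ),
    Outcome(
        description="Participants feel more socially connected",
        indicators=[],
        method="",
    ),
]

incomplete = unmapped(framework)
print(incomplete)
```

Running a check like this before data collection begins is one way to spot outcomes that have been named but cannot yet be measured.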

The data you collect for an evaluation may come in a number of forms. It may be quantitative, including numbers from monitoring or collected through closed questions on questionnaires. This information might have been collected at the end of a project or activity, or throughout. Data can also come in the form of transcriptions of interviews or focus group discussion, open feedback, meeting minutes, photographic documentation of activities, even artworks created by participants. Whatever kind of data you collect, you will need to consider how you will protect the confidentiality, and possibly also the anonymity, of respondents and participants, and how you are going to avoid bias. The way in which you design and administer forms and questionnaires or run interviews and focus groups can introduce bias, and will need careful thought. In addition, you will want to think about how to use sampling to make your evaluation encompass as wide a range of participants’ experience as possible.

The techniques used to analyse quantitative and qualitative data are quite different. For quantitative data you are likely to want to describe the patterns you observe in the numbers you have collected. If your analysis needs to go beyond the descriptive – and you are seeking to infer meaning from the data – it is best to seek advice. Qualitative data will generally be analysed using content or ‘thematic analysis’, a method which identifies patterns within the data, usually (but not always) participants’ words collected in interviews or focus group discussion.
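As a minimal sketch of the descriptive step for quantitative data, the example below summarises responses to a single closed question. The scores are invented for illustration and the `describe` function is a hypothetical helper; it assumes answers have been coded from 1 (strongly disagree) to 5 (strongly agree). It only describes the pattern in the numbers and makes no attempt at inference.

```python
from collections import Counter
from statistics import mean, median

# Invented responses to one closed question, coded 1 to 5.
responses = [4, 5, 3, 4, 2, 5, 4, 4]


def describe(scores):
    """Summarise a list of coded responses without inferring anything."""
    counts = Counter(scores)
    return {
        "n": len(scores),                        # number of responses
        "mean": mean(scores),                    # average score
        "median": median(scores),                # middle score
        "counts": dict(sorted(counts.items())),  # how many gave each score
    }


summary = describe(responses)
print(summary)
```

A summary like this can go straight into a report. Anything beyond it, such as testing whether a change is statistically significant, falls into the territory where the text above recommends seeking advice.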

You may want to explore how to collect and analyse data using arts-based methods such as film or video. Some examples of ways in which researchers have gone about doing this can be browsed from this section.

Evaluation findings need to be recorded so that they can be shared. This is usually achieved through a written report that describes the project and the evaluation and highlights what has been learned. A report is useful however brief, and sometimes brief is best. It should present a balanced account of the project, commenting on its impact, the strengths and weaknesses of its delivery and the learning that has been captured that might inform future projects.

Reporting does not have to be confined to a written document. You could explore using film, animation or infographics to bring your findings to life. The important thing to consider is who you are addressing with the report and how this audience will want to access the findings.